AI-Powered Automated Evaluation System

TensorLearners partnered with a higher-education services provider to modernize and scale their academic evaluation workflows by designing and deploying an AI-Powered Automated Evaluation System.

Objective

The primary objective of this engagement was to enable a higher-education services provider to scale academic evaluation operations without increasing manual effort, cost, or grading variability. As student volumes grew and academic cycles became more compressed, the institution needed a solution that could deliver consistent, fair, and auditable evaluations at scale.

TensorLearners modernized the evaluation lifecycle by implementing an AI-assisted, rubric-driven assessment framework aligned with official academic standards. The framework augments human evaluators with a deterministic system that applies the same correction logic, scoring criteria, and validation to every submission. With this solution, TensorLearners aimed to:

- Reduce evaluation turnaround time during peak academic periods

- Eliminate inter-evaluator variability through standardized rubric enforcement

- Improve transparency and audit readiness of grading decisions

- Enable scalable evaluation workflows without proportional increases in staffing or operational cost

- Preserve academic rigor while introducing automation into critical assessment processes

Core Problem

Educational institutions operate at the intersection of scale, fairness, and academic rigor. As submission volumes grow and evaluation cycles tighten, traditional human-only grading models struggle to deliver consistent, transparent, and timely outcomes.

This AI-powered evaluation system was designed to address the core operational problem institutions face: how to evaluate large volumes of academic submissions accurately, consistently, and auditably without overburdening evaluators or compromising academic standards.

1. Manual, Time-Intensive Evaluation: Academic assessments rely heavily on manual review, resulting in long turnaround times and evaluator fatigue—especially during peak academic cycles.

2. Inconsistent Grading Standards: Subjective interpretation of rubrics across evaluators leads to grading variance, impacting fairness, credibility, and student trust.

3. Scalability Constraints During Peak Loads: Evaluation capacity does not scale with submission spikes, creating bottlenecks during examinations and result publication windows.

4. Limited Transparency and Audit Readiness: Traditional grading workflows lack structured reasoning and traceability, making audits, reviews, and compliance checks difficult.

Our Solution

TensorLearners designed an AI-Powered Automated Evaluation System that acts as a disciplined digital evaluator—executing rubric-based grading consistently, transparently, and at scale. The system enables:

- Automated ingestion of academic rubrics and student submissions

- Structured parsing and chunking of documents

- Context-aware semantic retrieval of rubric criteria

- AI-driven evaluation aligned strictly with official correction rules

- Deterministic scoring and rule-based validation

- Human-in-the-loop review for flagged discrepancies

Rather than replacing academic judgment, the system augments evaluators by eliminating repetitive work and enforcing standardized grading logic.

Solution Architecture

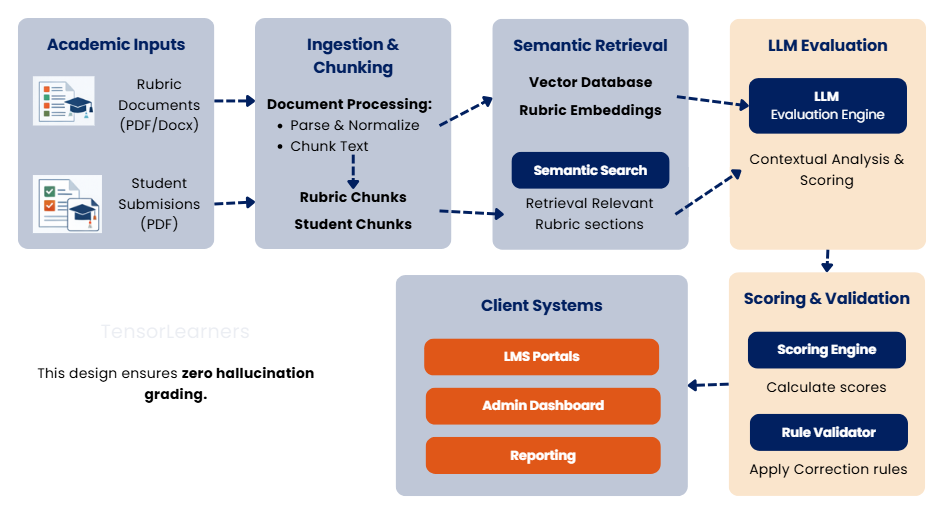

The platform follows a modular, enterprise-grade AI architecture, ensuring explainability, scalability, and governance.

Technology Stack

This system is built on a modular, end-to-end technical architecture that separates ingestion, intelligence, retrieval, orchestration, and infrastructure concerns into well-defined layers. Each layer is independently scalable, governed, and auditable, so the system delivers consistent, explainable outcomes while meeting enterprise standards for security, reliability, and performance.

Ingestion Layer

- Automated rubric and submission ingestion

- Secure batch upload pipelines

- PDF parsing and normalization
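
As an illustration of the parsing and normalization step, the sketch below cleans text that has already been extracted from a PDF and splits it into overlapping word windows. It is a minimal example under stated assumptions, not the production pipeline: the function names `normalize` and `chunk_words` and the `size` and `overlap` defaults are hypothetical, and PDF text extraction itself is out of scope here.

```python
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace, such as those left by PDF extraction."""
    return re.sub(r"\s+", " ", text).strip()


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words.

    Each window shares `overlap` words with the previous one, so a rubric
    criterion that straddles a boundary still appears whole in some chunk.
    (Assumed parameters; the real system's chunking policy is not documented.)
    """
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = normalize(text).split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks
```

Overlapping windows trade a little storage for retrieval robustness; the right window size depends on how long individual rubric criteria tend to be.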

Intelligence Layer

- LLM-based qualitative reasoning

- Rubric-aligned evaluation logic

- Deterministic scoring to avoid generative drift
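
One way to picture deterministic scoring is to let the language model produce only a qualitative level per criterion and compute the points with fixed arithmetic. The sketch below assumes that split; the `Criterion` type, the level names, and the `LEVEL_FRACTION` table are hypothetical and are not taken from the deployed system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    max_points: int


# Hypothetical mapping from a qualitative level to a share of the points.
LEVEL_FRACTION = {"missing": 0.0, "partial": 0.5, "complete": 1.0}


def score_submission(rubric: list[Criterion], levels: dict[str, str]) -> dict[str, float]:
    """Turn per-criterion levels into points using a fixed lookup table.

    The same levels always yield the same points, and an unknown or absent
    level raises an error instead of being silently guessed.
    """
    scores = {}
    for criterion in rubric:
        level = levels.get(criterion.name)
        if level not in LEVEL_FRACTION:
            raise ValueError(f"no valid level for {criterion.name!r}: {level!r}")
        scores[criterion.name] = criterion.max_points * LEVEL_FRACTION[level]
    return scores


rubric = [Criterion("thesis", 4), Criterion("evidence", 6)]
levels = {"thesis": "complete", "evidence": "partial"}  # e.g. produced by the LLM step
scores = score_submission(rubric, levels)
total = sum(scores.values())
```

Keeping the arithmetic outside the model means the numeric result can be recomputed and checked from the recorded levels alone.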

Retrieval-Augmented Generation (RAG)

- Semantic embeddings using OpenAI models

- Pinecone vector database for rubric retrieval

- Context-aware evaluation grounded in official rules
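
The retrieval idea can be sketched without any external service. The example below ranks rubric criteria by cosine similarity to a passage from a submission. It deliberately swaps in a toy bag-of-words vector for the learned embeddings and an in-memory sort for the vector database, so `embed`, `cosine`, and `retrieve` are illustrative stand-ins, not the OpenAI or Pinecone APIs.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, criteria: list[str], top_k: int = 2) -> list[str]:
    """Return the `top_k` criteria most similar to the query text."""
    q = embed(query)
    ranked = sorted(criteria, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


criteria = [
    "Thesis statement is clear and arguable",
    "Evidence is cited from credible sources",
    "Grammar and spelling are correct",
]
top = retrieve("The essay cites credible sources as evidence", criteria, top_k=1)
```

In a deployment like the one described, the embedding call and the similarity search would be delegated to the embedding model and the vector database, while the ranking principle stays the same.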

Orchestration & Agent Logic

- Multi-step evaluation workflows

- Modular agent design (retrieve → analyze → score → validate)

- Compatible with LangChain and LangGraph
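
The retrieve → analyze → score → validate flow can be sketched as a chain of plain functions passing a shared state dictionary. This is a framework-free illustration and does not use LangChain or LangGraph; the state keys, the keyword-matching `analyze` stub (standing in for the LLM judgment), and the validation rules are all assumptions made for the example.

```python
from typing import Callable

State = dict
Step = Callable[[State], State]


def retrieve(state: State) -> State:
    # Stub: select every rubric criterion; a real step would query the vector store.
    return {**state, "criteria": list(state["rubric"])}


def analyze(state: State) -> State:
    # Stub for the LLM judgment: a criterion is met if its keyword appears.
    text = state["submission"].lower()
    met = {name: state["rubric"][name]["keyword"] in text for name in state["criteria"]}
    return {**state, "met": met}


def score(state: State) -> State:
    # Fixed arithmetic: full points if met, zero otherwise.
    points = {n: state["rubric"][n]["points"] if ok else 0 for n, ok in state["met"].items()}
    return {**state, "points": points, "total": sum(points.values())}


def validate(state: State) -> State:
    # Rule-based checks; any flag routes the submission to a human reviewer.
    max_total = sum(rule["points"] for rule in state["rubric"].values())
    flags = []
    if not 0 <= state["total"] <= max_total:
        flags.append("total out of range")
    if set(state["points"]) != set(state["rubric"]):
        flags.append("criterion missing a score")
    return {**state, "flags": flags, "needs_human_review": bool(flags)}


PIPELINE: list[Step] = [retrieve, analyze, score, validate]


def run(state: State) -> State:
    for step in PIPELINE:
        state = step(state)
    return state


result = run({
    "rubric": {
        "thesis": {"keyword": "argue", "points": 4},
        "evidence": {"keyword": "according to", "points": 6},
    },
    "submission": "I argue that rubrics reduce grading variance.",
})
```

Because each step only reads and returns state, steps can be tested in isolation or mapped onto graph nodes in an orchestration framework.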

Cloud & Infrastructure

- Hosted on Amazon Web Services

- Horizontally scalable compute

- Secure secrets and access management

Data & Reporting

- Structured, tabular outputs

- Exportable reports (CSV / dashboards)

- Audit-friendly evaluation traces
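
A tabular, audit-friendly output could look like one row per submission and criterion, with the rationale stored next to the points. The sketch below writes such rows to CSV with Python's standard `csv` module; the column names in `FIELDS` and the sample rows are hypothetical and do not reflect the system's actual report schema.

```python
import csv
import io

# Assumed report columns: one row per (submission, criterion) pair.
FIELDS = ["submission_id", "criterion", "points", "max_points", "rationale"]


def to_csv(rows: list[dict]) -> str:
    """Serialize evaluation rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [
    {
        "submission_id": "S-001",
        "criterion": "thesis",
        "points": 4,
        "max_points": 4,
        "rationale": "Clear, arguable claim in the first paragraph.",
    },
    {
        "submission_id": "S-001",
        "criterion": "evidence",
        "points": 3,
        "max_points": 6,
        "rationale": "Sources cited but not evaluated for credibility.",
    },
]
report = to_csv(rows)
```

Storing the rationale per criterion lets a reviewer trace any total back to the individual rubric decisions behind it.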

Outcome Delivered

The system delivers a fully automated, rubric-based evaluation engine that ensures consistent grading aligned with official correction rules. Each assessment is accompanied by transparent, rubric-referenced explanations, enabling full traceability and audit readiness.

Deployed in a production environment with live academic usage, the system operates at scale with reliability and consistency, behaving like a disciplined senior evaluator that delivers expert judgment continuously, without fatigue.
